Breaking down India's AI opportunity

Everything about building AI from India for the world.

A few weeks ago, we sat down with Hemant Mohapatra, Partner at Lightspeed India, former Google and AMD executive, and investor in SarvamAI, the startup building India’s sovereign AI model, to talk about AI. Should we build a foundational model? Where do we fit in the vast AI world? Have we already missed the train?

This is our attempt to distill our 1.5-hour-long conversation into a concise 10-minute read, while also adding our own research to detail the Indian AI opportunity.

In case you missed the last edition, we recently launched GrowthX Host Club, a club of the country’s most ambitious leaders who care. Hemant is bringing back Cubbon Walks with GrowthX as part of our October curation. Check out his event and all upcoming events HERE.

The Indian government is investing in AI.

Last year, the Ministry of Electronics & Information Technology (MeitY) launched the IndiaAI Mission, allocating $1.25 billion to initiatives that include AI model development, building a national compute grid, and launching AI innovation centres.

Since then, the government has received a staggering 506 foundational model proposals, 43 of which are just for large language models (LLMs). It seems like a lot of us want to build an OpenAI alternative — but should we?

Should Indian builders build an OpenAI competitor?

“Companies should exist to solve a need — is the world dying for another foundational model?” There are layers to this. Let’s look at them one by one.

First, AI’s distribution phase is near.

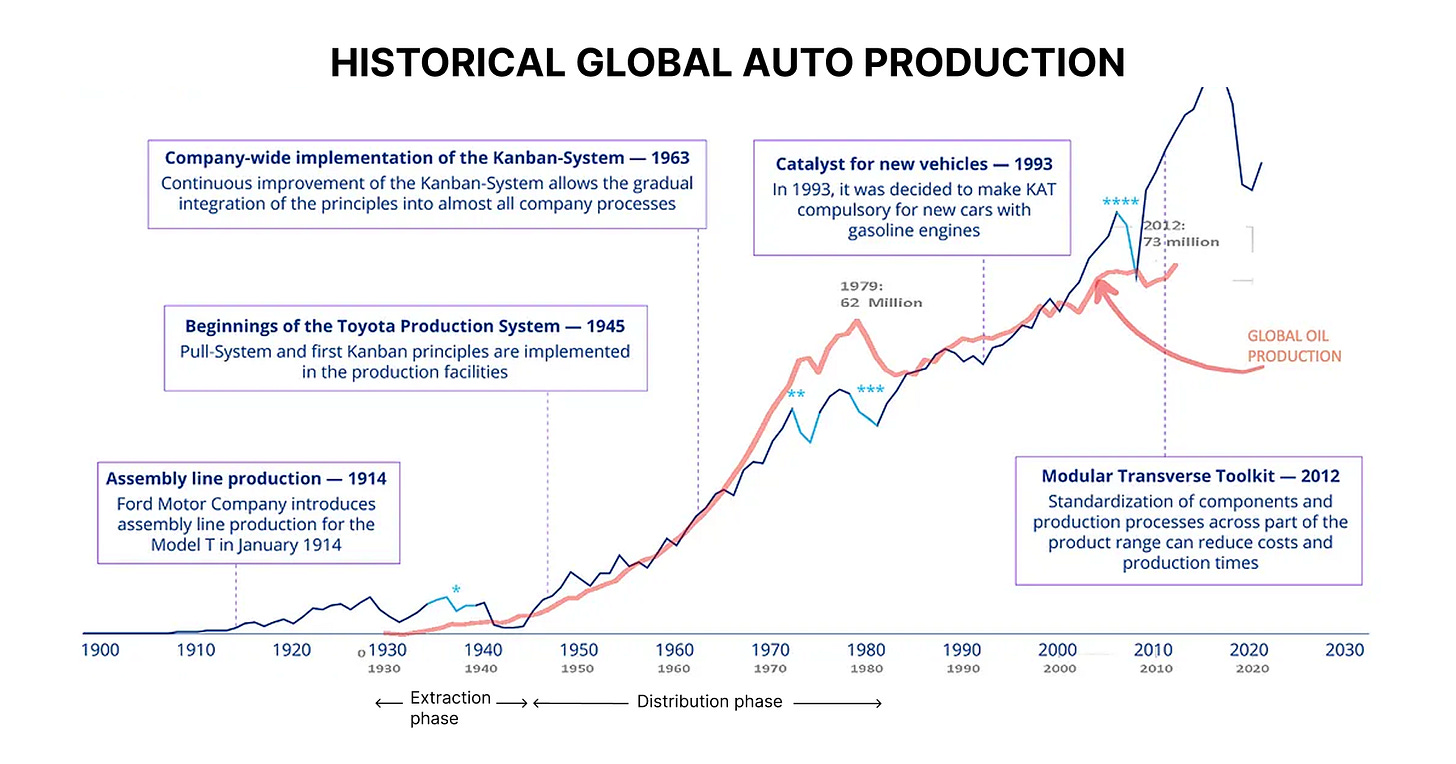

Every disruptive innovation has an extraction phase and a distribution phase. Automobiles had them, and so did the internet. In the automobile industry, the extraction phase began in the 1920s, as companies sought the best ways to drill for oil, and continued until the 1940s, fueled by World War II.

After the 1940s, as oil became widely available, manufacturers shifted their focus to developing technologies that used it. Until the 1980s, companies competed on features such as engine capacity and vehicle customisation.

AI is following a similar trend.

First, chip companies from the internet age (NVIDIA, Intel, AMD) optimised their hardware for AI. Then came the foundational model companies (OpenAI, Anthropic, DeepSeek), which turn that compute into models. That covers extraction and leaves a massive untapped opportunity in distribution: the AI application layer (tools and UIs).

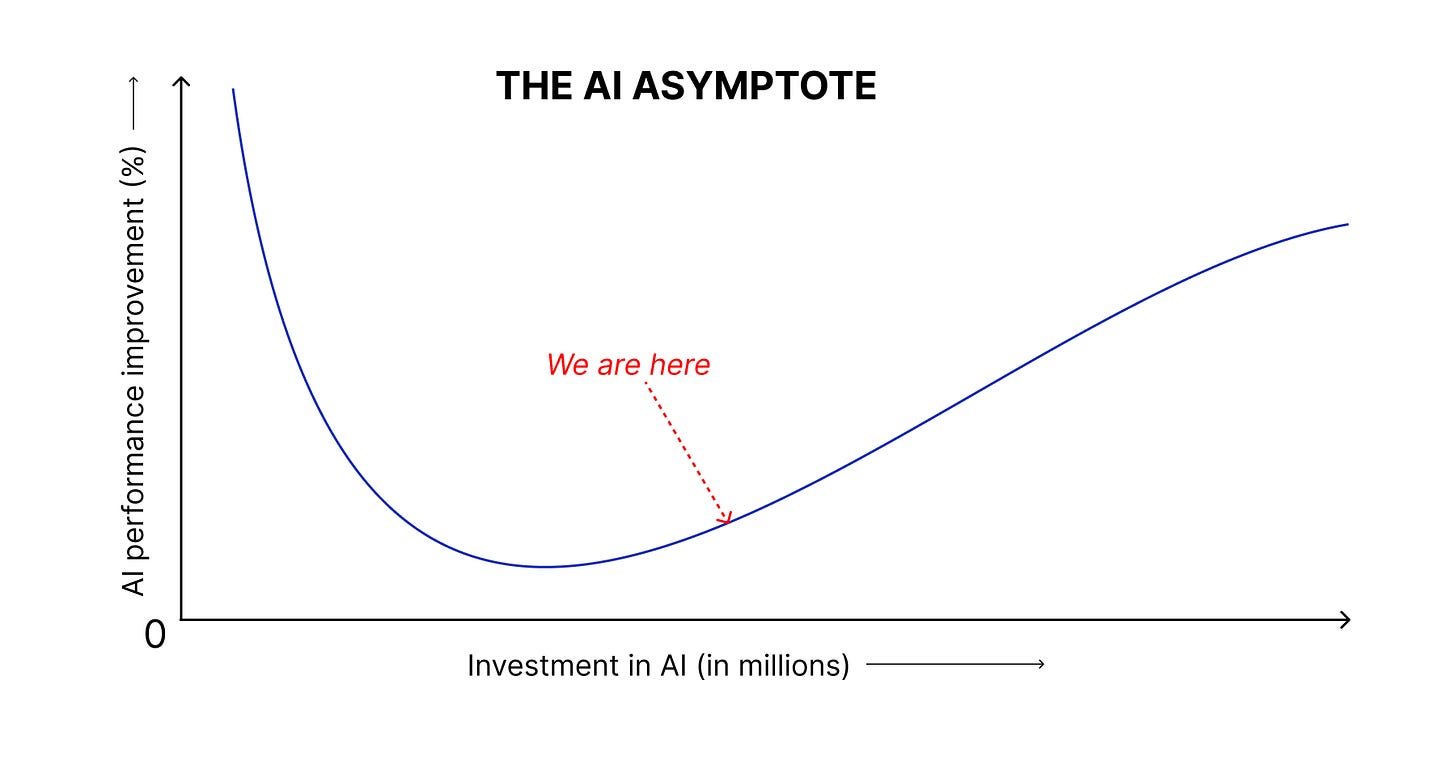

Second, diminishing returns.

Both chip manufacturing and algorithm R&D require massive capital and research resources. On top of that, we’re already past the sweet spot where more effort still buys meaningful results.

“For instance, $10B could have improved your performance by 5% years ago. Now, $10B might give you a 1% performance improvement,” Hemant says.
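Taking Hemant’s illustrative figures at face value (they are an example he gives, not measured benchmarks), the price of each percentage point of improvement has grown fivefold:

$$
\underbrace{\frac{\$10\text{B}}{5\%}}_{\text{years ago}} = \$2\text{B per } 1\%
\qquad\text{vs.}\qquad
\underbrace{\frac{\$10\text{B}}{1\%}}_{\text{now}} = \$10\text{B per } 1\%
\qquad\Rightarrow\qquad
\frac{\$10\text{B}}{\$2\text{B}} = 5\times
$$

The same spend now buys one-fifth of the gain, which is the diminishing return he describes.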

So, how should Indian AI founders build?

First, skip the middleware.

Otherwise, the OpenAIs of the world and popular customer-facing applications could eat you up. The reason is simple: when you build middleware, you’re building a tool that connects foundational LLMs (like OpenAI’s GPT models) to applications (like Riverside, to generate video subtitles). Builders working on such tools face a significant threat:

Consolidation.

OpenAI (the LLM provider) and customer-facing applications (like Riverside) both want to expand their functionality quickly for their users. Unless there is a strong reason to rely on your tool for part of the workflow, they are more likely to build the capability themselves or integrate directly with each other, avoiding the extra cost of a middleman.

Jasper AI started as an AI copywriting assistant in 2021. Once models like GPT-4 entered the market, tools like Notion integrated similar features natively, commoditising standalone generators. Users also started prompting ChatGPT to evaluate their copy. The result? Jasper pivoted to become an AI workflow tool for marketers.

Second, build vertical software or lose your advantage.

Take the example of Harvey, a domain-specific AI for law firms. OpenAI might not be building AI for law firms yet; for now, it’s too small a market for them to go after. So Harvey can grab this market by running the “collect user data → retrain the model → repeat” loop and going through hundreds of product iterations.

The same is true for existing scaled products like HubSpot. By integrating AI directly into its product, HubSpot is much better protected against any standalone AI copywriting tool designed for marketers.

Third, bet on data diversity.

AI founders can pull three levers to succeed: infrastructure, algorithms, and data. Big players dominate infrastructure and algorithms, but they depend almost exclusively on publicly available internet data or self-reported user data. India’s diverse data is something OpenAI or Anthropic can’t access yet. This gives Indian AI players, like SarvamAI, the unique advantage of data diversity at the scale of nearly 150 crore people. A few interesting opportunities:

1. Revenue cycle management/regulatory compliance models

India accounts for 60% of total healthcare BPO revenue, driven by low labour costs and a specialised workforce willing to stretch its working hours. As a result, India has access to millions of records that could serve as training data: medical coding, billing, patient communications, claims processing, and collections data from call recordings.

2. Video generation models

Roughly 64,000 movies have been made in India since the early 1900s. These could serve as training data for complex hand movements, language, and cultural narratives.

3. Sovereign health models

Hospital records, health surveys, and government research data are banks of unique phenotypic (think medical history), demographic, and genetic data that could train sovereign AI models for drug discovery and disease research. Where existing data is insufficient, AI companies can generate synthetic data to build richer training datasets. Companies like SarvamAI are already creating synthetic training data.

Forget cloning: reclaim AI independence.

We can’t go toe-to-toe with the giants while paying them rent to build our AI tools. To Hemant, self-reliance is the only way ahead, and he suggests a few paths to get there:

Controlling the end-to-end infrastructure.

Most AI chips are designed in the US, manufactured in Taiwan, and assembled in China, while the major foundational LLMs we rely on, like GPT-4, Gemini, and Llama, come from the US. Complete sovereignty over the underlying infrastructure would protect our apps from collapsing in the event of geopolitical tension. MeitY is already working toward this through the $1.25B IndiaAI Mission.

Investing in R&D

India’s R&D spending currently stands at only 0.7% of GDP, which may be contributing to the brain drain of AI researchers. India must aim to raise it to 3-5% of GDP to build good models. China has already made this kind of push with its Thousand Talents Plan, which gave top technical talent labs, capital, and 10-15 years of R&D runway to produce significant results.